Scaling Microservices Without Losing Observability and Control

Microservices promise agility, resilience, and the ability to scale components independently by breaking a monolithic application into manageable, autonomous services. It’s an enticing architectural vision, but it introduces a significant challenge: how do you maintain a watchful eye and a firm grip on a system composed of dozens or even hundreds of distributed components? Scaling microservices without losing observability and control is not just about adding more instances; it’s about deliberately building the infrastructure and practices needed to manage complexity.

As your microservices architecture grows, the sheer number of moving parts can overwhelm traditional monitoring tools and manual oversight. Requests traverse multiple services, data flows through various queues, and a single issue can have cascading effects that are difficult to pinpoint. Without robust observability and well-defined control mechanisms, scaling tends to bring lost visibility, more downtime, and developer burnout.

The Pillars of Observability: Logs, Metrics, and Traces

True observability goes beyond simple monitoring; it’s the ability to infer the internal state of a system by examining its external outputs. For microservices, this relies heavily on three core pillars:

- Centralized Logging: Every service, regardless of its function or language, must funnel its logs to a central, searchable platform. This isn’t just about collecting data; it’s about standardizing log formats, enriching logs with correlation IDs (to link events across services), and providing powerful querying capabilities. Tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk are invaluable here.

- Granular Metrics: Beyond basic CPU and memory usage, collect application-specific metrics. Think about request rates, error rates per endpoint, latency percentiles, queue depths, and business-level metrics specific to each service. Prometheus with Grafana, Datadog, or New Relic can provide real-time dashboards and alerting based on these critical indicators.

- Distributed Tracing: This is arguably the most crucial pillar for understanding the flow of a single request across multiple services. A distributed tracing system assigns a unique ID to a request at its entry point and propagates it through every service it touches. This allows you to visualize the entire request path, identify bottlenecks, and pinpoint exactly which service failed or introduced latency. OpenTelemetry is a widely adopted, vendor-neutral standard for instrumenting services, while Jaeger and Zipkin are popular backends for storing and visualizing the resulting traces.
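The correlation ID idea from the logging and tracing pillars can be sketched with nothing but the Python standard library. This is a minimal illustration, not a substitute for a tracing library: the `X-Correlation-ID` header name, the `handle_request` function, and the JSON field names are all assumptions chosen for the example.

```python
import contextvars
import io
import json
import logging
import uuid

# Holds the correlation ID for the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object that includes the correlation ID."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "service": record.name,
            "message": record.getMessage(),
            "correlation_id": correlation_id.get(),
        })


def build_logger(service_name, stream):
    """Create a logger that writes structured JSON lines to `stream`."""
    logger = logging.getLogger(service_name)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def handle_request(logger, headers):
    """Reuse the caller's ID if present, otherwise mint one at the entry point."""
    # "X-Correlation-ID" is a hypothetical header name used for illustration.
    cid = headers.get("X-Correlation-ID") or uuid.uuid4().hex
    token = correlation_id.set(cid)
    try:
        logger.info("request received")
        # Headers to attach to any downstream call so the ID keeps propagating.
        return {"X-Correlation-ID": cid}
    finally:
        correlation_id.reset(token)


if __name__ == "__main__":
    buffer = io.StringIO()
    log = build_logger("orders", buffer)
    handle_request(log, {"X-Correlation-ID": "abc123"})
    print(buffer.getvalue(), end="")
```

Because every service logs the same `correlation_id` field, a single query in the central log platform can pull up all events for one request. In practice an instrumentation library such as OpenTelemetry would handle context propagation for you; the sketch only shows the underlying mechanism.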

Regaining Control: Automated Processes and Governance

Observability tells you what is happening; control helps you steer the ship. As you scale, manual interventions become impractical and error-prone. Automation and clear governance are paramount.

Automated Deployment and Configuration Management

Consistency across environments and services is key. Implement robust CI/CD pipelines that automate testing, deployment, and rollback processes. Use infrastructure as code (IaC) tools like Terraform or Pulumi to provision resources and configuration management tools like Ansible or Chef to manage service configurations. This reduces human error and ensures that every service instance adheres to predefined standards.

Service Discovery and API Gateways

With dozens of services coming online and going offline, dynamic service discovery (e.g., Consul, Eureka, Kubernetes’ built-in DNS) is essential. An API Gateway acts as the single entry point for all client requests, handling routing, load balancing, authentication, and rate limiting, reducing the complexity for individual services.
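To make the rate-limiting responsibility concrete, here is a minimal token bucket sketch, one common way to implement rate limiting. It is an assumption for illustration, not a description of how any particular gateway product works; the `TokenBucket` class and its parameters are hypothetical names.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so tests can control time
        self.last = clock()

    def allow(self):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_sec=5, capacity=10)
    accepted = sum(bucket.allow() for _ in range(20))
    print(f"accepted {accepted} of 20 back-to-back requests")
```

A gateway would typically keep one bucket per client or API key (for example, in a dictionary keyed by client ID) and respond with HTTP 429 when `allow()` returns `False`.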

Clear Communication and Standards

While not strictly a technical tool, a lack of communication can derail scaling efforts. Establish clear API contracts, naming conventions, and architectural guidelines. Regular code reviews and knowledge sharing sessions help ensure consistency and prevent silos from forming.

Proactive Strategies for Resilience

The best way to maintain control is to anticipate problems before they occur.

Performance Monitoring and Alerting

Set up intelligent alerting based on your metrics and logs. Don’t just alert on “service is down”; alert on deviations from normal behavior, increased error rates, or degraded latency. Route alerts through an incident management tool such as PagerDuty or Opsgenie so that the right team is notified immediately.
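One simple way to alert on deviations from normal behavior rather than on fixed thresholds is to compare the current value against a recent baseline. The sketch below uses a z-score over a window of past samples; the function name `is_anomalous` and the default threshold of three standard deviations are assumptions for illustration, and production alerting systems usually offer more robust methods.

```python
from statistics import mean, stdev


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations
    above the mean of `history` (for example, recent p95 latency samples)."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any increase counts as a deviation.
        return current > mu
    return (current - mu) / sigma > threshold


if __name__ == "__main__":
    recent_latency_ms = [100, 102, 98, 101, 99]
    for sample in (103, 120):
        print(sample, is_anomalous(recent_latency_ms, sample))
```

The check is one-sided on purpose: a latency or error rate that drops below the baseline is rarely worth paging someone for.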

Chaos Engineering

Deliberately inject failures into your system in a controlled manner to uncover weaknesses and build resilience. Netflix’s Chaos Monkey randomly terminates instances in production, while platforms like Gremlin can simulate network latency, service outages, or resource exhaustion. Experiments like these push your teams to build more robust and fault-tolerant services from the start.
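At the smallest scale, fault injection can be sketched as a wrapper around a service call that adds random latency or raises an error with a configurable probability. This is a toy illustration of the idea, not how dedicated chaos tooling works; the `chaos` decorator, the `InjectedFault` exception, and the `fetch_inventory` function are hypothetical names.

```python
import functools
import random
import time


class InjectedFault(Exception):
    """Raised in place of a real call when the experiment injects a failure."""


def chaos(failure_rate=0.0, max_delay_s=0.0, rng=None, sleep=time.sleep):
    """Wrap a function so calls are randomly delayed and/or failed.

    `rng` and `sleep` are injectable so experiments can be made repeatable.
    """
    rng = rng or random.Random()

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if max_delay_s > 0:
                # Simulate network latency before the call.
                sleep(rng.uniform(0, max_delay_s))
            if rng.random() < failure_rate:
                # Simulate an outage of the dependency.
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@chaos(failure_rate=0.2, max_delay_s=0.05)
def fetch_inventory(item_id):
    """Stand-in for a call to a downstream service."""
    return {"item_id": item_id, "in_stock": True}


if __name__ == "__main__":
    for attempt in range(5):
        try:
            print(fetch_inventory(42))
        except InjectedFault as exc:
            print(f"attempt {attempt}: {exc}")
```

Running callers against a wrapper like this in a test environment shows quickly whether they have sensible timeouts, retries, and fallbacks.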

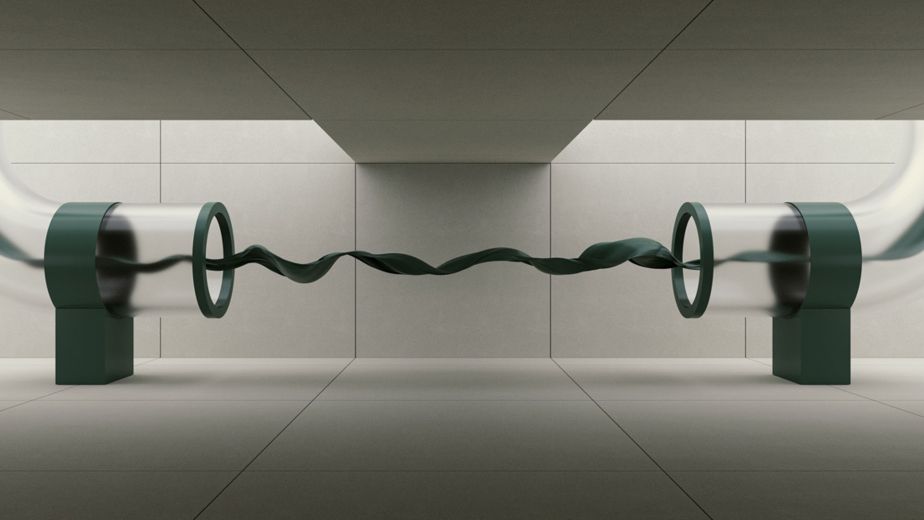

The Role of a Service Mesh

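For highly complex microservices environments, a service mesh (e.g., Istio, Linkerd) can abstract away much of the networking and observability complexity. It provides traffic management, security policies, and metrics collection, typically through proxies deployed alongside each service, so most of these capabilities require no changes to service code. Distributed tracing is a partial exception: the mesh can generate spans, but services usually still need to forward trace context headers for those spans to be stitched into a single trace. The result is that many control and observability functions are centralized in one layer.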

Successfully scaling microservices means building an infrastructure that provides deep insight and firm control, not merely running more instances. By prioritizing robust observability and implementing intelligent control mechanisms from the outset, you can navigate the complexities of a distributed system and keep your applications performant, resilient, and manageable. The work is continuous, but with the right practices and tools it leads to an agile, robust architecture capable of meeting future demands.